CS 194/294-196 (LLM Agents) - Lecture 2, Shunyu Yao

Short Summary:

This lecture explores the concept of LLM agents, intelligent systems that use large language models (LLMs) to interact with environments and perform tasks. The lecture traces the historical development of LLM agents, highlighting the evolution from rule-based chatbots to more sophisticated reasoning agents. It discusses how LLM agents can be trained and used to solve various tasks, including question answering, web interaction, and even scientific discovery. The lecture emphasizes the importance of developing robust and reliable LLM agents that can collaborate with humans and perform complex tasks in real-world settings.

Detailed Summary:

1. Introduction to LLM Agents:

- The lecture begins by defining LLM agents as intelligent systems that use LLMs to interact with environments.

- It emphasizes that the concept of "agent" is broad and can encompass various systems, from robots to chatbots.

- The speaker treats "intelligence" as the defining property of an agent, while acknowledging that what counts as intelligent has shifted over time.

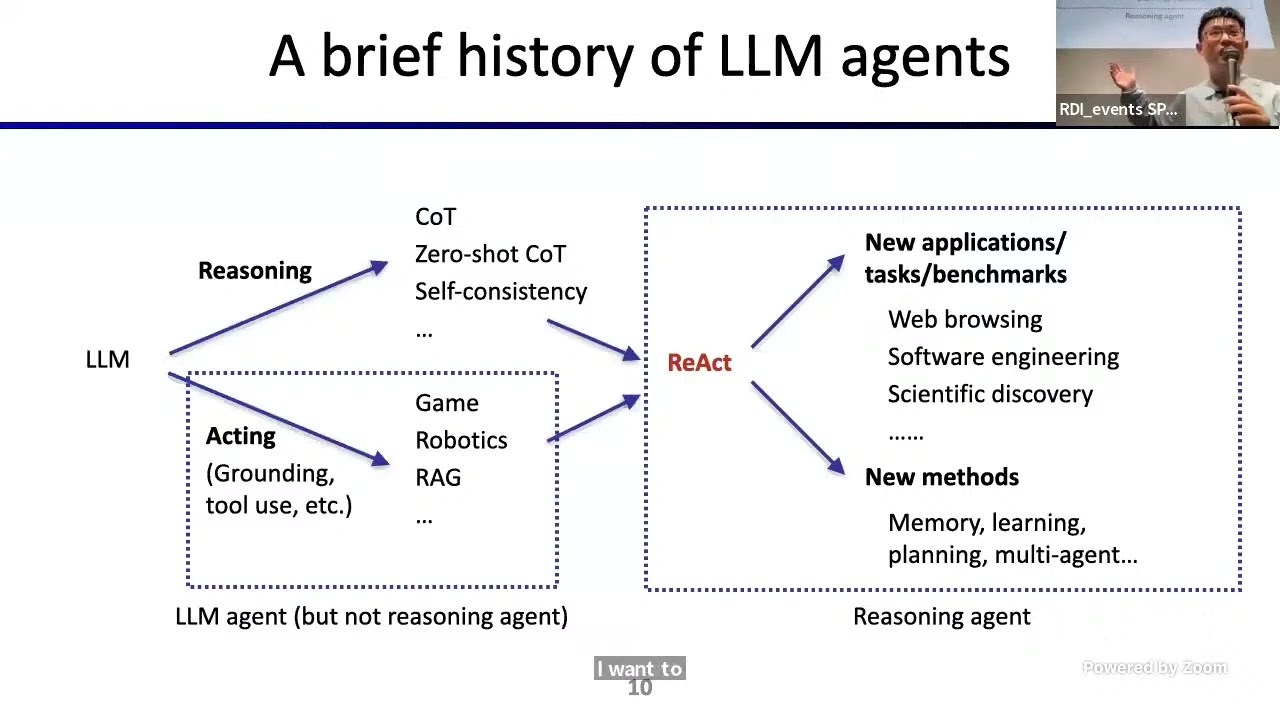

2. Brief History of LLM Agents:

- The lecture traces the history of text agents, starting with rule-based chatbots like ELIZA in the 1960s.

- It then discusses the use of reinforcement learning (RL) to build text agents, but highlights the limitations of both rule-based and RL approaches.

- The speaker argues that LLMs offer a more general and flexible approach to building agents, thanks to their ability to learn from massive text corpora and adapt to new tasks.

3. Paradigm of Reasoning and Acting:

- The lecture introduces two key paradigms: reasoning and acting.

- Reasoning refers to the ability of LLMs to solve complex problems by generating intermediate steps before the final answer, as in chain-of-thought prompting.

- Acting involves LLMs interacting with external environments, such as search engines, APIs, or physical tools.

- The speaker introduces "ReAct," a framework that combines reasoning and acting, so that an agent can interleave thinking with acting much as a human would.

4. ReAct: A Framework for Reasoning Agents:

- ReAct is prompt-based: the LLM is shown an example task together with a trajectory of thoughts and actions that solves it.

- The agent then generates its own thoughts and actions, interacting with the environment and updating its context based on observations.

- The lecture walks through a concrete example in which ReAct answers a question about buying companies by issuing Google searches.

- The speaker emphasizes the synergy between reasoning and acting, where reasoning guides actions and actions provide new information for reasoning.
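
The thought, action, observation cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's or the paper's implementation: `react_loop`, `scripted_llm`, `TOY_KB`, and the `search`/`finish` action names are all hypothetical, and the "LLM" is a scripted stand-in so the example runs without any model or network access.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical toy "search engine": a lookup table standing in for a real tool.
TOY_KB: Dict[str, str] = {"capital of France": "Paris"}


def scripted_llm(context: str) -> Tuple[str, str, str]:
    """Stand-in for an LLM call; returns (thought, action, argument)."""
    if "Observation:" not in context:
        return ("I need to look this up.", "search", "capital of France")
    # Reuse the most recent observation as the final answer.
    last_obs = context.strip().split("Observation: ")[-1]
    return ("The observation answers the question.", "finish", last_obs)


def react_loop(
    question: str,
    llm: Callable[[str], Tuple[str, str, str]],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Tuple[Optional[str], str]:
    """Alternate thoughts and actions, appending each observation to the context."""
    context = f"Question: {question}\n"
    for _ in range(max_steps):
        thought, action, arg = llm(context)
        context += f"Thought: {thought}\nAction: {action}[{arg}]\n"
        if action == "finish":
            return arg, context
        if action in tools:
            observation = tools[action](arg)
        else:
            observation = f"unknown action: {action}"
        # The observation becomes part of the context for the next thought.
        context += f"Observation: {observation}\n"
    return None, context  # step budget exhausted without a final answer


if __name__ == "__main__":
    tools = {"search": lambda q: TOY_KB.get(q, "no result")}
    answer, trace = react_loop("What is the capital of France?", scripted_llm, tools)
    print(trace)
    print("Answer:", answer)
```

The growing `context` string is the agent's only state: reasoning decides the next action, and each observation feeds the next round of reasoning. In a real system, `scripted_llm` would be replaced by a model call prompted with a few-shot trajectory.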

5. Long-Term Memory for LLM Agents:

- The lecture highlights the limitations of short-term memory in LLMs, which are restricted by context window size and attention mechanisms.

- It introduces the concept of long-term memory, which allows agents to store and retrieve information over time, enabling them to learn from past experiences and improve their performance.

- The speaker describes "reflection," a simple form of long-term memory where the agent reflects on its past actions and updates its knowledge based on feedback.

- The lecture also discusses other forms of long-term memory, such as episodic memory and semantic memory, which can be used to store and retrieve different types of information.
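
As a rough sketch of reflection as long-term memory, the loop below retries a task and carries written notes from failed attempts into later ones. Everything here is an assumption made for illustration: `run_with_reflection` and the `toy_*` helpers are hypothetical names, the "agent" is scripted rather than an LLM, and the feedback comes from a single hard-coded unit test.

```python
from typing import Callable, List, Optional, Tuple


def run_with_reflection(
    task: str,
    attempt: Callable[[str, List[str]], str],
    evaluate: Callable[[str], Tuple[bool, str]],
    reflect: Callable[[str, str], str],
    max_trials: int = 3,
) -> Tuple[Optional[str], List[str]]:
    """Retry a task, storing a reflection after each failure."""
    memory: List[str] = []  # persists across trials, unlike a single context window
    for _ in range(max_trials):
        output = attempt(task, memory)
        ok, feedback = evaluate(output)
        if ok:
            return output, memory
        memory.append(reflect(output, feedback))
    return None, memory


def toy_attempt(task: str, memory: List[str]) -> str:
    """Scripted stand-in for an LLM: fixes the bug once a note mentions it."""
    if any("subtract" in note for note in memory):
        return "def add(a, b):\n    return a + b\n"
    return "def add(a, b):\n    return a - b\n"


def toy_evaluate(code: str) -> Tuple[bool, str]:
    """Run one unit test against the generated code and report feedback."""
    namespace: dict = {}
    exec(code, namespace)  # acceptable here only because the input is a toy string
    result = namespace["add"](2, 3)
    if result == 5:
        return True, "all tests passed"
    return False, f"add(2, 3) returned {result}, expected 5"


def toy_reflect(code: str, feedback: str) -> str:
    """Stand-in for an LLM-written reflection on the failure."""
    return f"Previous attempt failed ({feedback}); I used subtract instead of add."


if __name__ == "__main__":
    code, notes = run_with_reflection(
        "write add(a, b)", toy_attempt, toy_evaluate, toy_reflect
    )
    print(code)
    print(notes)
```

The key design point is that `memory` lives outside any single attempt, so a lesson learned in one trial remains available in the next even though each attempt starts from a fresh context.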

6. LLM Agents in the Broader Context of Agents:

- The lecture compares LLM agents to previous paradigms of agents, including symbolic AI agents and deep RL agents.

- It argues that LLM agents are fundamentally different because they use language as an intermediate representation, enabling them to reason and act in a more human-like way.

- The speaker highlights the advantages of language-based agents, including their flexibility, generality, and ability to scale to complex tasks.

7. Applications and Implications of LLM Agents:

- The lecture discusses the potential of LLM agents to automate various tasks in the digital world, including web interaction, software engineering, and scientific discovery.

- It highlights the importance of developing practical and scalable tasks for LLM agents, moving beyond synthetic benchmarks to real-world applications.

- The lecture presents example tasks such as "WebShop" and "SWE-bench," which involve complex interactions with real-world data and environments.

8. Future Directions for LLM Agents:

- The lecture concludes by discussing five key areas for future research in LLM agents:

- Training: Developing training methods that are specifically tailored for agents, using data generated by prompted agents.

- Interface: Designing better interfaces that are optimized for LLM agents, taking into account their unique capabilities and limitations.

- Robustness: Ensuring that LLM agents are reliable and can perform tasks consistently in real-world settings, especially when interacting with humans.

- Benchmarking: Developing new benchmarks that capture the complexity and nuances of real-world tasks, including human-in-the-loop scenarios.

- Collaboration: Exploring ways for LLM agents to collaborate with humans, leveraging their strengths to achieve common goals.

9. Conclusion:

- The lecture emphasizes the importance of simplicity and generality in research, advocating for the development of simple but powerful frameworks like ReAct.

- It highlights the need for abstraction and a broad understanding of different domains to develop effective LLM agents.

- The speaker concludes by expressing excitement about the future of LLM agents and their potential to revolutionize various fields.